Programming the cloud ought to be delightful

This essay presents our new approach to cloud computing. It's much simpler than the status quo: deployment is done with a single ordinary function call, services can call each other as easily as local functions, and storage is as easily accessible as in-memory data. Entire classes of tedium disappear and you can now focus on the important work of your actual business logic.

Building for the cloud today isn't just complicated… it's bizarre. Why?

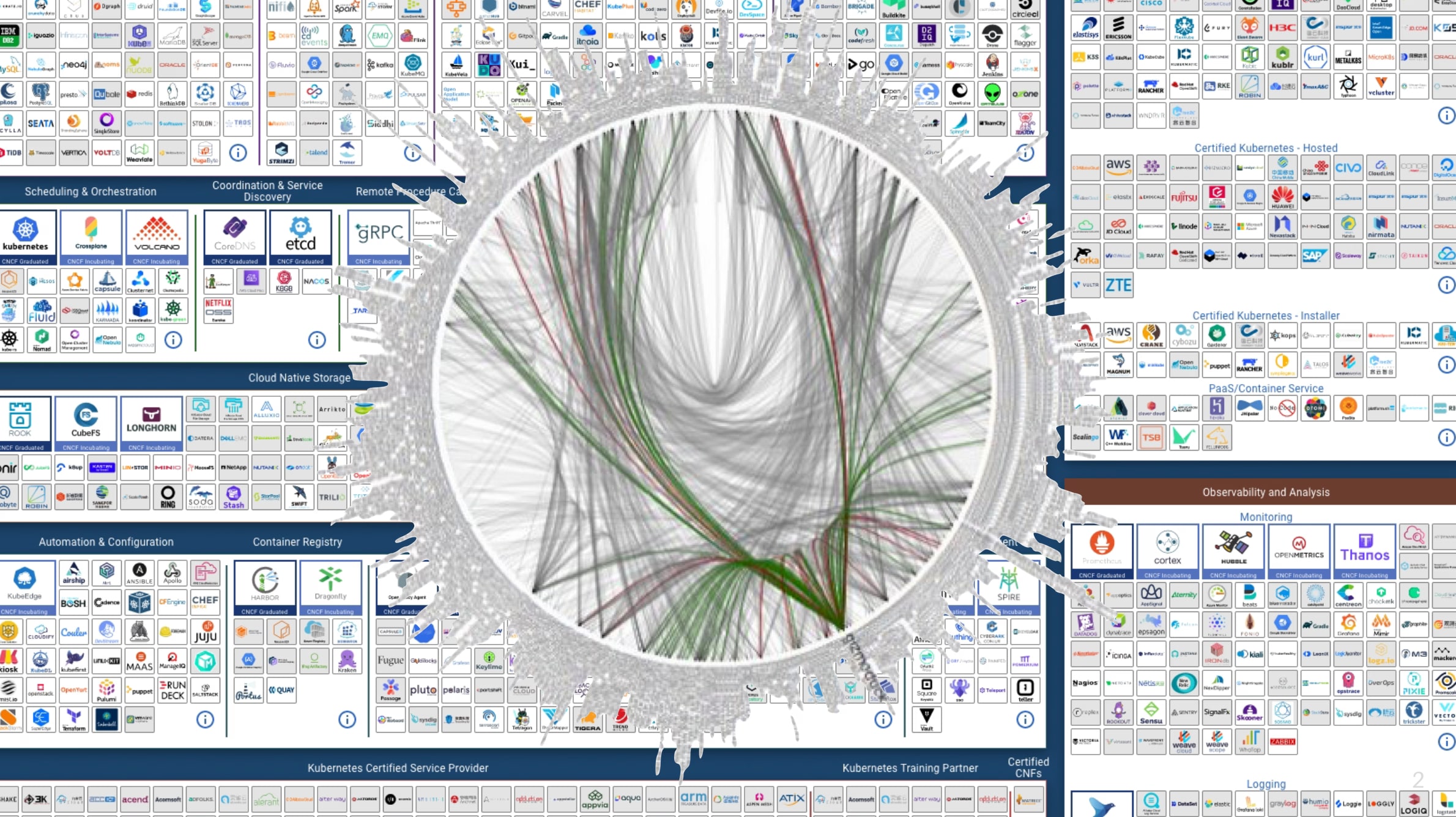

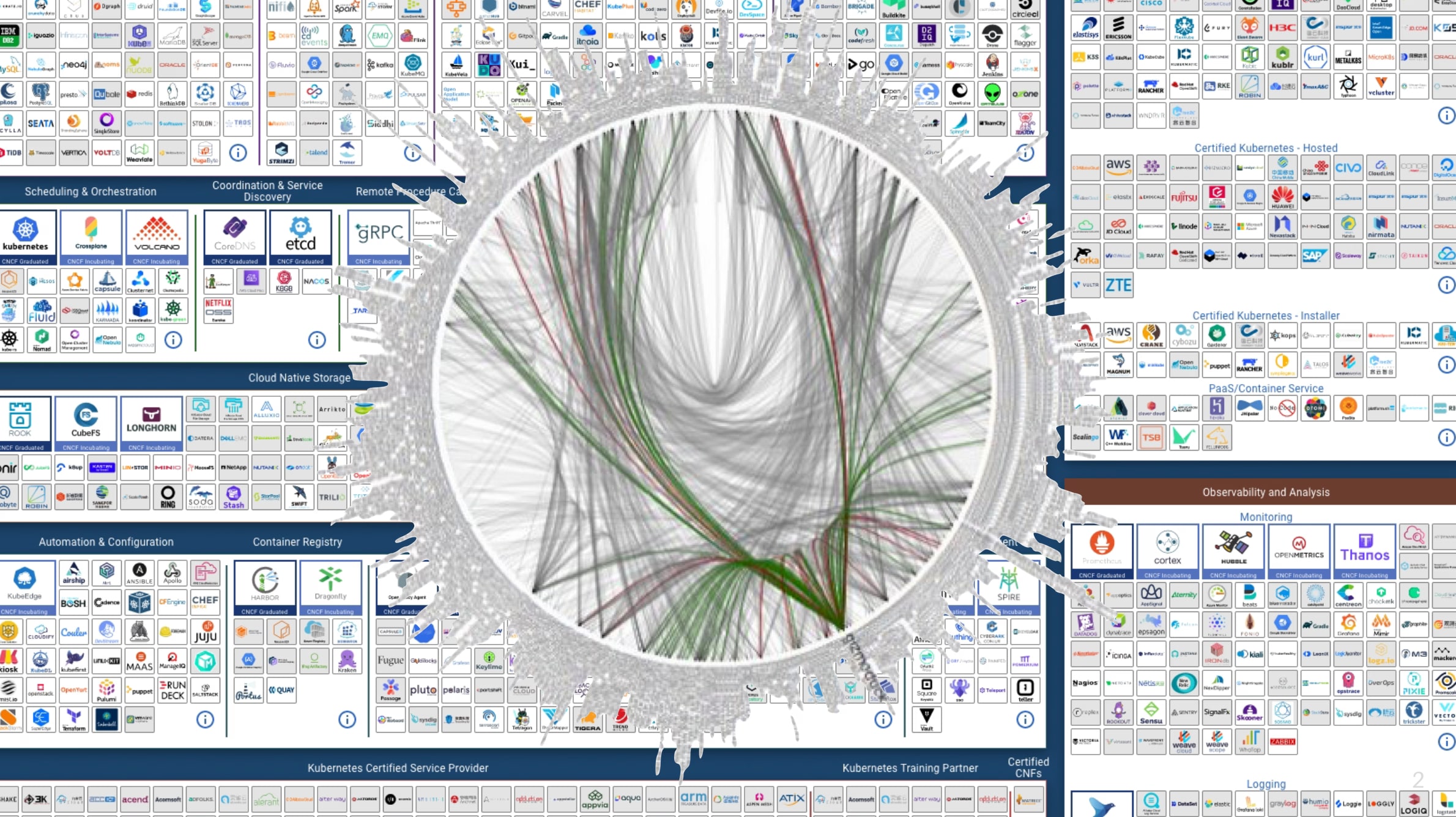

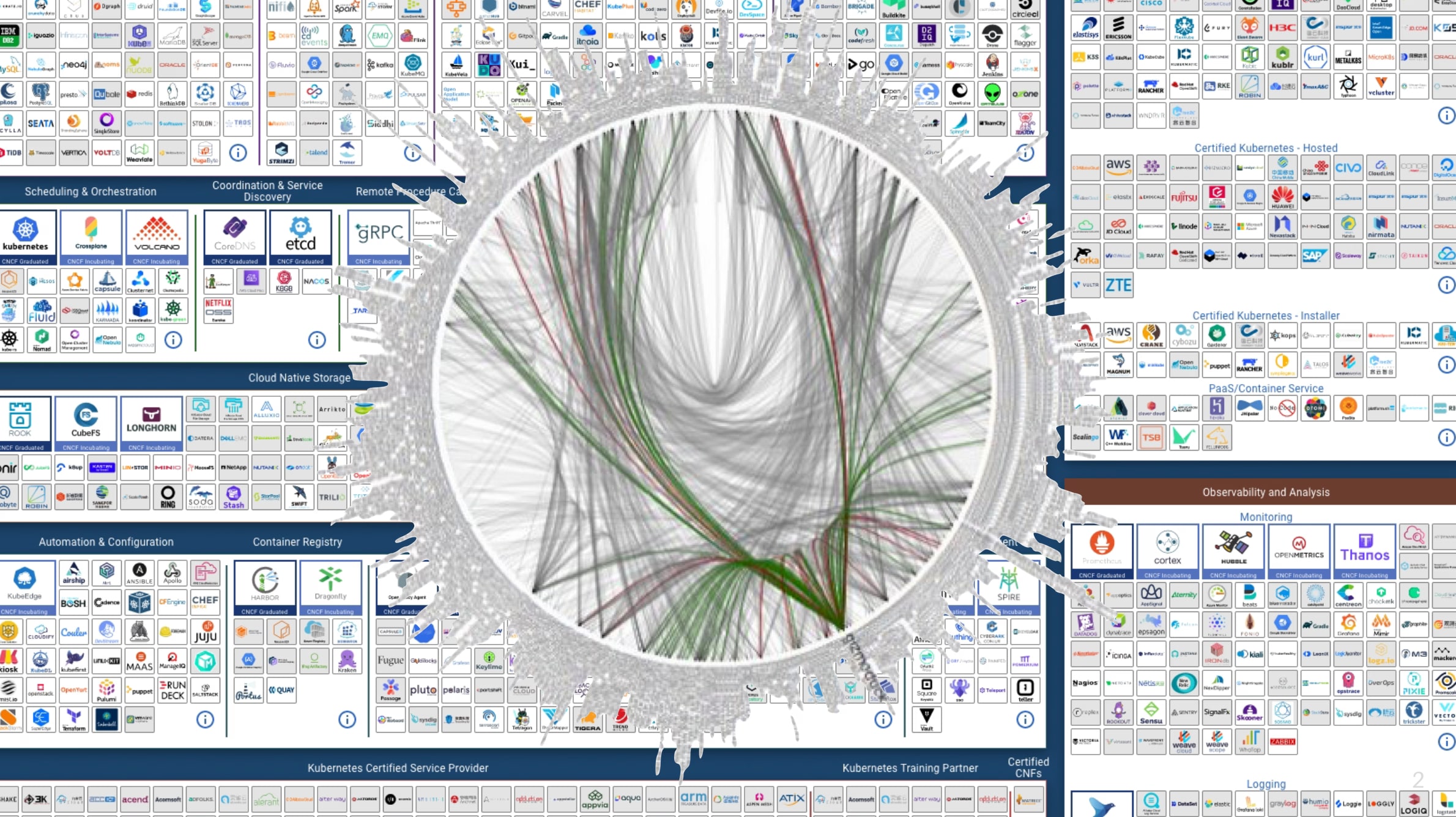

These days, if you want to build a system in the cloud, you might end up assembling a contraption like this:

Building and operating a system like this is complicated, even if you don't have a gajillion microservices. For instance:

- Services need to be packaged up and deployed.

- Service instances need to be scaled up or down, with failures handled gracefully.

- Any communication path between services generally requires some ad hoc wire format that must be encoded and decoded on either side of the network boundary (converting to and from blobs of JSON or Protobuf, say).

- Access to storage similarly involves a layer of boilerplate for converting to and from SQL rows, or JSON blobs in some NoSQL store or durable queue.

In the background of the Death Star, there are all those boxes: a growing deluge of "cloud native" technologies you're supposed to use to assemble the Rube Goldberg machine of your overall system.

It's complicated. And it's complicated in a very strange way: a lot of the work you end up doing is not programming.

Odd: a lot of the work is 'not programming'

Let's compare the following tasks. If you have, say, a list of things in memory on one computer and you'd like to sort them, what do you do? You call a function in your programming language. This experience of "ordinary programming" is nice in so many ways:

- You call functions with the same uniform syntax everywhere.

- Function calls are typechecked. (Statically, hopefully!) You find out before running the code if you're passing the function the wrong sort of arguments. (Even in a dynamically-typed language, there's at least uniformity in the dynamic typing rules and how violations are reported.)

- You can introduce new functions and new abstractions. Composition and code reuse are easy.

In short, all the amazing tools our programming languages have evolved for managing the complexity of describing computations are at your disposal, and life is sunshine and rainbows.

But when building a system in the cloud today, there isn't just regular programming happening. There's a whole other strange class of activities, accomplished not with function calls in your programming language, but with a random assortment of YAML engineering and bash scripts and other gobbledygook.

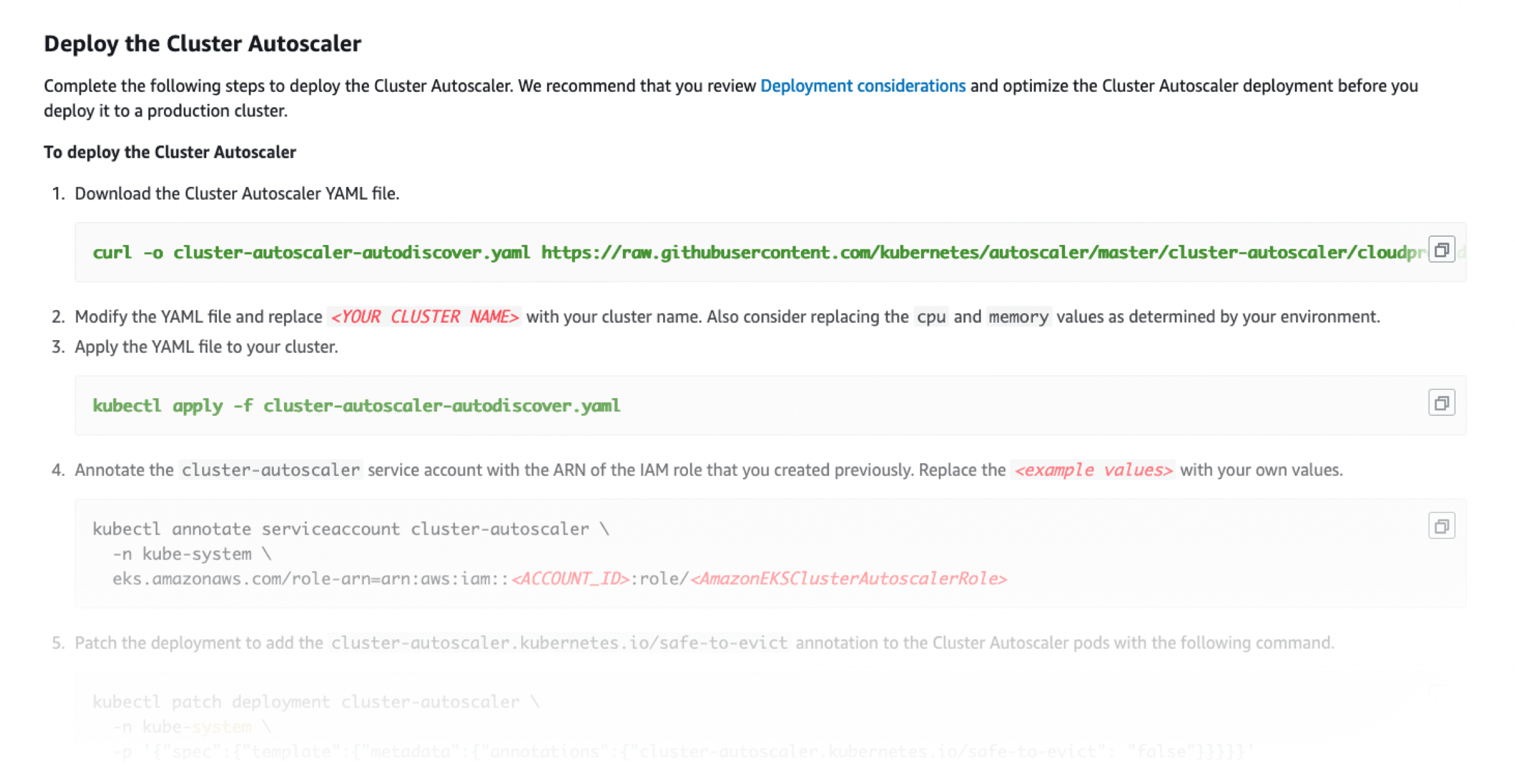

For instance, suppose you'd like to connect an autoscaling group to your managed Kubernetes cluster. Instead of a simple function call, you have some unguessable nonsense that starts with a curl command to fetch the "cluster autoscaler YAML file" and goes downhill from there.

Honestly, what is even happening here? Why is there this strange bifurcation between the beautiful, coherent, logical world of ordinary programming, and the garbage fire of assembling and operating a system in the cloud?

It's a good question that we'll come back to later.

A dream in 3 parts

But for a little while at least, let's put aside the negativity and instead imagine: what is the dream? How should cloud computing be?

First, deployment should be as simple as calling a function. No more packaging or building and shipping around multi-gigabyte containers and uploading them to the container registry whosiewhatsit, then configuring the something something on the Kubernetes thingamabob. Good grief, no! Instead, imagine deploying services or batch jobs with a single function call in your programming language. And then just like that, in seconds, your service is live in the cloud. Can it be that easy?

The Dream

Deploy with a function call

No packaging or building containers.

Containers are an implementation detail! Your service or batch job is about your application's domain and business logic. This is what's valuable and interesting, and it's defined in the beautiful sunshine and rainbows world of ordinary programming, in a real language. This should be your focus. If containers are somehow involved in getting things running in the cloud, they should be an implementation detail you can avoid thinking about. (There are also electrons moving around in various complicated ways when you deploy software to the cloud, but luckily you don't have to think at that level of abstraction!)

The Dream

Service calls are 1 line of code

No converting to and from JSON blobs or protobuf. No explicit networking.

Part 2 of the dream is that calling services should be easy: perhaps a single line, with no need to encode and decode arguments or results or do explicit networking. Moreover, remote calls should be typechecked! In short, a remote call should be about as easy and convenient as a local function call.

That said, we do want our type system to track where remote calls are happening, allow us to catch errors, make such remote calls asynchronously, and so forth, but all these things can be accomplished without a pile of brittle boilerplate code on either side of the service call boundary.

And lastly, part three of the dream: it would be great if accessing storage from our services or batch jobs were as easy as accessing in-memory data. Imagine: no more boilerplate converting your domain objects to and from rows of SQL or blobs of JSON.

The Dream

Storage is typed, too

As easy as in-memory data structures.

The dream is possible! We have the technology.

All these things and more are possible. We just need to build on the right foundations! 🤔 Yet what are those foundations? Let's examine all three parts of the dream in turn.

Part 1: How to deploy with a function call

We usually think of "deployment" as something that happens out of band in a CI pipeline using some horrifying combination of YAML files, bash scripts, glue, and duct tape. Setting up, testing, debugging, and making changes to one of these Rube Goldberg machines is… unpleasant. What would be so much better is deployment via an ordinary function call in your programming language, like so:

```unison
deploy myService
```

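There it is: a call to the `deploy` function, passing it `myService`.

Making this work is not trivial. We have three difficult requirements to meet.

First, let's notice that `deploy` is passed a function, `myService`, which has to be sent over the network to wherever it's being deployed. So our first requirement: some way of serializing arbitrary values, functions included.

That's not quite enough though, because `myService` will generally call other functions, which in turn call still others. The destination needs all of that code too, so our second requirement: some way of enumerating every dependency of the thing being deployed.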

But even this isn't quite enough, because we can certainly imagine that wherever we're deploying to has a conflicting set of dependencies. Thus, our third and final requirement: some way of eliminating dependency conflicts as a possibility.

Requirements for the dream:

- All things serializable

- Enumerable dependencies

- No dependency conflicts

Most programming languages can’t do these things

Learn how Unison does it

Let's look at a little example:

```unison
factorial n = product (range 1 n)

myService = ... (factorial 9) ...
```

Suppose we deploy `myService`, which calls `factorial`. How does the destination know which `factorial` we mean?

One approach that doesn't work is just referring to functions by name. The name "factorial" could refer to various definitions over time, as libraries release new versions. The deployment destination may have a conflicting set of library dependencies which already assigns a different meaning to that name.

Another approach which doesn't work is to "somehow" set up "your entire cluster" in advance with an agreed-upon set of dependencies. Not only is this out-of-band setup step tedious and annoying, involving the very gobbledygook of containers and YAML files and baling wire we're trying to avoid, it's also inherently brittle and unscalable. Few modern systems can get away with requiring that all nodes update their dependencies together in lockstep, and operating a monolithic system that tries to accomplish this without downtime is itself extremely complicated.

Unison takes a different approach: functions are identified by hash, not by name:

```unison
factorial#a82j n = product#92df (range#b283 1 n)

myService = ... (factorial#a82j 9) ...
```

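That is, `factorial` will have the name "factorial" for when we're showing the code to a human, but it will be uniquely identified by its hash. That hash is computed from the definition's syntax tree, with each dependency referenced by its own hash rather than by name.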

Thus, a Unison hash uniquely identifies a definition, and pins down the exact version of all its dependencies.
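To make the hashing idea concrete, here is a toy Python sketch. It is not Unison's actual algorithm, which hashes a normalized syntax tree. It assumes a hypothetical codebase that maps names to token lists and contains no recursive cycles. `hash_of` replaces every reference to another definition with that definition's hash before hashing. The result therefore changes whenever any transitive dependency changes, but not when the definition itself is renamed.

```python
import hashlib

# Hypothetical toy codebase: name -> body as a tuple of tokens.
# Tokens that are keys of the codebase are references to other definitions;
# anything else (literals, operators, primitives) is hashed as-is.
Codebase = dict[str, tuple[str, ...]]


def hash_of(name: str, codebase: Codebase) -> str:
    """Content hash of a definition; assumes no recursive cycles."""
    resolved = [
        # Replace each dependency's *name* with its *hash*, so the result
        # pins down the exact version of every transitive dependency.
        "#" + hash_of(tok, codebase) if tok in codebase else tok
        for tok in codebase[name]
    ]
    # The definition's own name is deliberately not part of the hash.
    return hashlib.sha256(" ".join(resolved).encode()).hexdigest()[:8]


v1: Codebase = {
    "range": ("builtin.range",),
    "product": ("fold", "(*)", "1"),
    "factorial": ("n", "->", "product", "(", "range", "1", "n", ")"),
}
# A later library release changes `product`; the text of `factorial` is unchanged.
v2: Codebase = {**v1, "product": ("fold", "(*)", "1", "--", "v2")}
```

With these definitions, `hash_of("factorial", v1)` and `hash_of("factorial", v2)` differ even though the body of `factorial` reads the same. Renaming `factorial` leaves its hash untouched.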

This in turn gives us a simple protocol for deploying code at runtime with a function call. The destination can keep a code cache, keyed by these hashes. On deploy, the destination can check whether it has all the dependencies of the function being deployed. Any hashes it's missing are then sent over and added to its cache.

Moreover, this can all be done with ordinary library code, since the Unison language provides all the necessary building blocks.
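The cache protocol described above can be sketched as a hypothetical, single-process Python model. Each `Node` holds a cache mapping a hash to a (body, direct-dependency hashes) pair. `deploy` ships only those hashes in the deployed definition's dependency closure that the destination lacks. The short hashes reuse the ones from the `factorial` example purely for illustration; none of these names come from Unison's libraries.

```python
from dataclasses import dataclass, field

# hash -> (body, hashes of its direct dependencies)
Cache = dict[str, tuple[str, tuple[str, ...]]]


@dataclass
class Node:
    """A hypothetical machine holding a hash-keyed code cache."""
    cache: Cache = field(default_factory=dict)

    def missing(self, hashes: list[str]) -> list[str]:
        return [h for h in hashes if h not in self.cache]


def closure(root: str, cache: Cache) -> set[str]:
    """Every hash reachable from `root` (inclusive) via dependency edges."""
    seen: set[str] = set()
    stack = [root]
    while stack:
        h = stack.pop()
        if h not in seen:
            seen.add(h)
            stack.extend(cache[h][1])
    return seen


def deploy(root: str, sender: Node, dest: Node) -> list[str]:
    """Copy whatever `dest` lacks; return the hashes actually transferred."""
    transferred = dest.missing(sorted(closure(root, sender.cache)))
    for h in transferred:
        dest.cache[h] = sender.cache[h]
    return transferred


sender = Node({
    "a82j": ("factorial", ("92df", "b283")),
    "92df": ("product", ()),
    "b283": ("range", ()),
})
# The destination already has `product`, plus an unrelated definition that
# happens to be called "factorial" there. Nothing conflicts, because
# entries are keyed by hash rather than by name.
dest = Node({"92df": ("product", ()), "zz99": ("factorial", ())})
```

Here `deploy("a82j", sender, dest)` transfers only `a82j` and `b283`. Repeating the call transfers nothing, because the destination's cache already holds the whole closure.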

With all this solved, you can indeed deploy services with an ordinary function call. Here's some actual Unison code. It takes just a few seconds to deploy, and it's live in the cloud:

```unison
helloWorld : HttpRequest ->{Exception, Log} HttpResponse
helloWorld = Route.run do
  ok.text "👋 Hello, Cloud!"

helloWorld.deploy : '{IO, Exception} ()
helloWorld.deploy = Cloud.main do
  -- Deploy to the cloud!
  h = deployHttp !Environment.default helloWorld
  ServiceName.assign (ServiceName.named "hello-world") h
```

If you're unfamiliar with Unison syntax, that's okay. The important bit is the line that calls `deployHttp`, deploying the `helloWorld` function as an HTTP service.

In this example, notice that deployment returns a hash-based URL, uniquely identifying the specific version of the service. Unison Cloud lets you assign names to these hashes to provide stable human-readable URLs for a service backed by different versions over time. This separation is also handy for things like staging deployments and integration testing, which can always hit the hash-based URL before "promoting to production" by assigning a name to that hash.
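The split between names and hashes behaves like a mutable pointer to immutable deployments. The sketch below is a hypothetical in-memory registry in Python, not the Unison Cloud API. The URL layout (`/h/<hash>` and `/s/<name>` on `example.com`) is invented for the example.

```python
from typing import Callable

BASE = "https://example.com"  # placeholder host; the URL scheme is invented


class Registry:
    """Hypothetical: immutable deployments by hash, mutable names on top."""

    def __init__(self) -> None:
        self.by_hash: dict[str, Callable[[str], str]] = {}
        self.names: dict[str, str] = {}  # stable name -> deployment hash

    def deploy(self, service_hash: str, handler: Callable[[str], str]) -> str:
        self.by_hash[service_hash] = handler
        return f"{BASE}/h/{service_hash}"

    def assign(self, name: str, service_hash: str) -> str:
        if service_hash not in self.by_hash:
            raise KeyError(f"unknown deployment {service_hash}")
        self.names[name] = service_hash  # "promoting" = repointing the name
        return f"{BASE}/s/{name}"

    def request(self, url: str, body: str) -> str:
        kind, key = url.rsplit("/", 2)[1:]
        service_hash = key if kind == "h" else self.names[key]
        return self.by_hash[service_hash](body)
```

A staging check can hit the new version's hash URL while the named URL still serves the old one. Promotion is then a single `assign` call.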

Part 2: Easy typed remote procedure calls

Moving on, let's look at how we can make calling services as easy as a local function call. Again, here's some hypothetical syntax, calling a service that maps songs to a list of albums that song appears on:

```unison
Services.call albumService (Song "Cruel Summer")
```

What do we need to make this happen? Well, if we're trying to avoid the programmer needing to write manual encoders and decoders of arguments and results, we will again need a way of serializing arbitrary things. Also as before, we need to be able to do so without the possibility of dependency conflicts, since the caller and the callee are at different locations and we could certainly imagine them having differing dependencies in their environment.

We've already solved both these problems in part 1, so we're going to just apply the same tricks here to provide a very nice experience for making remote calls. Here's how this shakes out in Unison:

```unison
deploy : (a -> {...} b) ->{Cloud} ServiceHash a b

call : ServiceHash a b -> a ->{Services} b

callName : ServiceName a b -> a ->{Services} b

albumService : ServiceHash Song [Album]
albumService = ...

call albumService (Song "Cruel Summer")
-- ==> [Album "Lover" "Taylor Swift"]
```

Both our services and the service calls are also typed, so the typechecker will tell us before running any code if we've passed the wrong sort of argument. The service call is also a 1-liner, easy as a local function call.

Note also that Unison's type system tracks that this remote effect is happening: the service call requires the `Services` ability, as the type of `call` shows.
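Here is a rough Python analogue of the typed `deploy`/`call` interface above. `pickle` stands in for generic serialization and a dictionary stands in for the network. This is an illustration under those assumptions, not how Unison implements remote calls; Unison can also serialize functions by hash, which `pickle` cannot do for code. Notice what the caller never writes: no encoder, no decoder, no HTTP client.

```python
import pickle
from dataclasses import dataclass
from typing import Callable, Generic, TypeVar

A = TypeVar("A")
B = TypeVar("B")


@dataclass(frozen=True)
class ServiceHash(Generic[A, B]):
    """Typed handle to a deployed service taking A and returning B."""
    value: str


# Stand-in for "the other side of the network": handlers that only ever
# see and return bytes. (pickle is only safe between trusted peers.)
_REMOTE: dict[str, Callable[[bytes], bytes]] = {}


def deploy(f: Callable[[A], B]) -> ServiceHash[A, B]:
    key = f"svc-{len(_REMOTE)}"  # placeholder for a real content hash
    _REMOTE[key] = lambda req: pickle.dumps(f(pickle.loads(req)))
    return ServiceHash(key)


def call(service: ServiceHash[A, B], arg: A) -> B:
    # Generic serialization on both sides: no hand-written encoders.
    return pickle.loads(_REMOTE[service.value](pickle.dumps(arg)))


@dataclass(frozen=True)
class Song:
    title: str


@dataclass(frozen=True)
class Album:
    title: str
    artist: str


def album_logic(song: Song) -> list[Album]:
    if song.title == "Cruel Summer":
        return [Album("Lover", "Taylor Swift")]
    return []


album_service = deploy(album_logic)
```

`call(album_service, Song("Cruel Summer"))` returns `[Album("Lover", "Taylor Swift")]`. Because `ServiceHash` is generic in its argument and result types, a static checker such as mypy should reject `call(album_service, "Cruel Summer")`. Unlike Unison, though, Python's types record nothing like the `Services` ability, so the remote effect stays invisible.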

Here's a demo of this actually working:

```unison
albumService.logic s = match s with
  Song "Cruel Summer" -> [Album "Lover" "Taylor Swift"]
  Song _ -> []

albumService : ServiceHash Song [Album]
albumService = Cloud.deploy env albumService.logic

main.logic : HttpRequest -> HttpResponse
main.logic =
  albumFor _ =
    name = route GET (s "album" / Parser.text)
    -- Typed remote service call!
    Services.call albumService (Song name)
      |> List.map Album.toJson
      |> Json.array
      |> ok.json
  Route.run (albumFor <|> '(notFound.text "??"))
```

Before moving on, let's return to the horrifying image that started this post:

Microservicey systems like this are not just complicated to build, they're also brittle. In the world of ordinary programming, if you wish to change how your program is factored, that's easy: shuffle some code around, with a typechecker to guide the process and make sure you've wired everything up correctly following your change. Life is good.

But changing how a microservice backend is factored is… not so good. At each of those service call boundaries, there's generally a layer of encoding and decoding boilerplate. Changing how services are factored means changing where those boundaries are placed, and what values are being serialized. You often end up needing to write a different layer of transport and encoding/decoding boilerplate or shuffle existing boilerplate around, rather than the nimble freedom of refactoring code that runs on one machine.

In the past several years, there's been some backlash against microservice architectures, in part for this reason. Some orgs are toning down the "micro" in "microservice" and moving to coarser bundles of functionality, or even going as far as using a monolith. But building and operating a monolith is itself complicated for other reasons. It also loses out on separate deployment and scaling of services, something many organizations want.

In Unison, we can get the best of both worlds: the ease of use of a monolith, but all the benefits of a microservice architecture.

Part 3: Typed storage

Moving on to part 3 of the dream, imagine if the database were as easy to access as in-memory data: typechecked by the programming language, and without tedious serialization code to and from rows of SQL or JSON blobs in NoSQL stores. (Of course, Unison also supports accessing external datastores, same as any other programming language, but we wanted our cloud platform to have a nicely typed Unison-native storage layer, too.) How do we do this?

How to

Easy typed storage

1 LOC. Store any value at all. Typechecked. No manual conversion to and from SQL rows, JSON etc.

We use the same trick as before. Just as with runtime deployment or RPC, where we needed to serialize arbitrary values without dependency conflicts, talking to storage is the same problem, really. We're serializing values of the language to the storage layer, then reading them back at a later time. We need a way of doing so without dependency conflicts, and we already know how to do that.

Here's how the API shakes out in Unison. We won't go through the details of the code here, but in short, we can declare typed tables, and access to those tables is typechecked and involves no manual serialization. The storage layer also supports transactions and is backed by highly scalable distributed cloud storage (in our current AWS region, it's backed by DynamoDB).
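As a rough model of this API shape, here is a single-process Python sketch of typed tables with all-or-nothing transactions. Everything in it (`Table`, `Database.transact`, `try_read`, `write`) is hypothetical and only mirrors the idea. It has no concurrency control, and `copy.deepcopy` plays the role of serialization.

```python
import copy
from dataclasses import dataclass
from typing import Callable, Generic, Optional, TypeVar

K = TypeVar("K")
V = TypeVar("V")
R = TypeVar("R")


@dataclass(frozen=True)
class Table(Generic[K, V]):
    """A named table whose keys have type K and values type V."""
    name: str


class Transaction:
    def __init__(self, data: dict) -> None:
        self._data = data

    def try_read(self, table: Table[K, V], key: K) -> Optional[V]:
        # deepcopy stands in for a serialization round trip: callers
        # never share mutable state with the store.
        return copy.deepcopy(self._data.get((table.name, key)))

    def write(self, table: Table[K, V], key: K, value: V) -> None:
        self._data[(table.name, key)] = copy.deepcopy(value)


class Database:
    """Single-process stand-in for a transactional, distributed store."""

    def __init__(self) -> None:
        self._data: dict = {}

    def transact(self, body: Callable[[Transaction], R]) -> R:
        working = copy.deepcopy(self._data)
        result = body(Transaction(working))  # an exception here aborts
        self._data = working                 # commit all writes at once
        return result


@dataclass(frozen=True)
class Song:
    title: str


@dataclass(frozen=True)
class Album:
    title: str
    artist: str


song_to_album: Table[Song, list[Album]] = Table("songToAlbum")


def album_logic(db: Database, song: Song) -> list[Album]:
    # Mirrors `tryRead ... |> getOrElse []` from the Unison example.
    found = db.transact(lambda tx: tx.try_read(song_to_album, song))
    return found if found is not None else []
```

If the function passed to `transact` raises, none of its writes are committed. That all-or-nothing behavior is the property the following paragraph builds on.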

```unison
songToAlbum : Table Song [Album]
songToAlbum = Table "songToAlbum"

albumService.logic s =
  -- Reading from storage
  State.tryRead db songToAlbum s |> getOrElse []

albumService : ServiceHash Song [Album]
albumService = Cloud.deploy env albumService.logic

main.logic : HttpRequest -> HttpResponse
main.logic =
  albumFor _ =
    name = route GET (s "album" / Parser.text)
    Services.call albumService (Song name)
      |> List.map Album.toJson
      |> Json.array
      |> ok.json
  Route.run (albumFor <|> '(notFound.text "??"))
```

Typed tables plus transactions are also powerful building blocks for all sorts of other interesting distributed data structures. Need a scalable sorted map? Check. A linearized event log? Check. A KNN index for vector search? Check. And the best part: all such data structures are written with ordinary high-level Unison code, much like how you'd write a single-machine, in-memory data structure.
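Here is a hypothetical Python sketch of what a linearized event log built on such primitives might look like: a size counter plus numbered entries, both updated inside one transaction. The store is a single-process toy whose global lock stands in for real transactions. It shows the shape of the idea only, not how Unison Cloud implements its log.

```python
import threading
from typing import Callable, Generic, TypeVar

E = TypeVar("E")
R = TypeVar("R")


class Store:
    """Toy transactional key-value store. One global lock serializes
    transactions, which is what makes the log below linearizable."""

    def __init__(self) -> None:
        self._data: dict = {}
        self._lock = threading.Lock()

    def transact(self, body: Callable[[dict], R]) -> R:
        with self._lock:
            working = dict(self._data)  # writes go to a private copy...
            result = body(working)
            self._data = working        # ...and are committed together
            return result


class EventLog(Generic[E]):
    """Append-only log built from two 'tables': a size counter and entries."""

    def __init__(self, store: Store, name: str) -> None:
        self.store, self.name = store, name

    def append(self, event: E) -> int:
        def body(tx: dict) -> int:
            n = tx.get((self.name, "size"), 0)
            tx[(self.name, "entry", n)] = event
            tx[(self.name, "size")] = n + 1
            return n  # the event's position in the log
        return self.store.transact(body)

    def read_all(self) -> list[E]:
        def body(tx: dict) -> list[E]:
            n = tx.get((self.name, "size"), 0)
            return [tx[(self.name, "entry", i)] for i in range(n)]
        return self.store.transact(body)
```

Because each append reads and bumps the counter inside one transaction, concurrent appenders each get a distinct position and the log has no gaps. A distributed store would need real concurrency control rather than one lock.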

Why is it so complicated, anyway?

Having eliminated many of the difficulties of building software in the cloud, let's now return to our question: why's the status quo so darn complicated?

It's because of a single profound reason: our programming languages are woefully limited in what they can talk about.

What we think of as "a program" is generally a description of what a single OS process on a single computer should do. This "program as OS process" paradigm has been dominant for decades, and it was generally fine back before the internet and the cloud were a thing, when developers wrote shrink-wrapped software and stamped it on CDs and called it a day.

Current paradigm

A program describes a single OS process on one machine.

But the OS process is just a tiny little box now. Here it is: 📦. While the programming experience is quite nice when you're working within that 📦, at its borders you have boilerplate encoding and decoding things to storage or other services. And the world is much bigger than just the one 📦. Nowadays, we're building whole systems, consisting of hundreds or thousands or millions of 📦s, spread across many different computers, all coordinating in just the right way to implement some overall behavior.

A system like this has a huge amount of information that needs to be specified, beyond the tiny little box where our programming languages have something to say.

But the sad part is that, rather than expanding the beautiful and cohesive world of programming and enabling our programming languages to express the new concepts needed for modern systems, we've stuck with the decades-old paradigm of "program as OS process" and compensated with yet more YAML files, duct tape, and baling wire. That's what the deluge of cloud-native technologies, with all its bizarro inconsistencies and quirks, is: a mess of our own making, created because we couldn't be bothered to take a step back and solve these problems properly.

A lot of the work is 'not programming'

So next time you're bashing your head against the wall, YAML engineering your CI whosiewhatsit to be able to talk properly to the Kubernetes thingamabob, just remember: you're dealing with this complexity instead of doing real programming because existing languages are too limited in what they can talk about. This isn't the future.

This is what we're solving with Unison.

Programming the cloud ought to be delightful. Come join us.